In this blog post, we will see how to perform web scraping with a headless browser, with tips from the developers at our mobile app development company in Bangalore.

What Is a Headless Browser and What Are Its Use Cases?

A headless browser is a web browser without a graphical user interface.

Headless browsers provide automated control of a web page in an environment similar to popular web browsers, but they are driven from a command-line interface or over a network connection. They are particularly useful for testing web pages because they render and interpret HTML the same way a regular browser does, including page layout, colors, fonts, and the execution of JavaScript and Ajax, which are usually not available with other testing methods.

Use Cases

- Test automation in modern web applications (web testing).

- Taking screenshots of web pages.

- Running automated tests for JavaScript libraries.

- Automating interaction with web pages.

What Is Puppeteer and What Can It Do?

Puppeteer is a Node library which provides a high-level API to control headless Chrome or Chromium over the DevTools Protocol. It can also be configured to use full (non-headless) Chrome or Chromium.

Most things that you can do manually in the browser can be done using Puppeteer! Here are a few examples to get you started:

- Generate screenshots and PDFs of pages.

- Crawl a SPA (Single-Page Application) and generate pre-rendered content (i.e. “SSR” (Server-Side Rendering)).

- Automate form submission, UI testing, keyboard input, etc.

- Create an up-to-date, automated testing environment. Run your tests directly in the latest version of Chrome using the latest JavaScript and browser features.

- Capture a timeline trace of your site to help diagnose performance issues.

- Test Chrome Extensions.

That’s it. Now we know that Puppeteer can help us crawl a web page.

We are going to crawl https://hub.packtpub.com/category/programming/ and fetch the programming news.

Setting up the Environment

Node.js must be installed in order to use Puppeteer. Installation steps are available on the official Node.js website.

We will create a Node project to do the scraping.

Open your terminal and cd to your preferred directory.

Run this command to create a new Node project:

| mkdir scrape-app && cd scrape-app && npm init -y |

Open the project in your favorite code editor. I use Visual Studio Code.

Install the Puppeteer package

Puppeteer comes in two variants:

- puppeteer: When you install this package, it downloads a recent version of Chromium that is guaranteed to work with the API.

- puppeteer-core: This version of Puppeteer doesn’t download any browser by default. It is intended to be a lightweight version of Puppeteer for launching an existing browser installation.

I will be using the puppeteer-core package for this project. Run this command in the project directory.

| npm i puppeteer-core |

Create a new file called index.js.

First, let’s import the Puppeteer library.

| const puppeteer = require("puppeteer-core"); |

Puppeteer is a Promise-based library: its calls to headless Chrome are asynchronous. So we need to define an asynchronous function in which we can await each step.

First, let’s test whether Puppeteer is installed and working correctly.

Remember, puppeteer-core is lightweight and doesn’t download a browser when the package is installed. That is why we need to give it the path to the locally installed Google Chrome.

On Linux, you can find the path of the google-chrome binary using this command.

| whereis google-chrome |

This command gives the path where google-chrome is installed. Use the same path in the code.

| executablePath: <path returned by command> |
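If you would rather not hard-code the path, the lookup can be sketched in Node itself. This is only an illustration: the helper name `findChromePath` and the list of candidate locations are assumptions, not part of Puppeteer, and the paths will differ between systems.

```javascript
const fs = require("fs");

// Candidate locations are assumptions for a typical Linux setup;
// adjust them to match your own machine.
const CANDIDATE_PATHS = [
  "/usr/bin/google-chrome",
  "/usr/bin/chromium-browser",
  "/usr/bin/chromium",
];

// Returns the first candidate that exists on disk, or null if none do.
// `exists` is injectable so the lookup can be tried without a real browser.
function findChromePath(candidates = CANDIDATE_PATHS, exists = fs.existsSync) {
  for (const candidate of candidates) {
    if (exists(candidate)) return candidate;
  }
  return null;
}
```

The returned value can then be passed as executablePath to puppeteer.launch(). If the helper returns null, it is probably better to stop with a clear error than to let the launch fail.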

| const url = "https://hub.packtpub.com/category/programming/"; const news = async () => { const browser = await puppeteer.launch({ executablePath: "/usr/bin/google-chrome", }); const page = await browser.newPage(); await page.goto(url, { waitUntil: "networkidle2" }); await page.screenshot({ path: "screenshot.png" }); await browser.close(); }; news(); |

You can run this code by executing the following command in the project directory.

| node index.js |

After a few seconds, a new file named screenshot.png is created in the project directory.

You can see a screenshot of the webpage https://hub.packtpub.com/category/programming/.

Now that we’ve covered the basics of Puppeteer, let’s get to the actual work. We will be crawling the news section of this page.

Scraping

Paste the following code into the index.js file.

| const puppeteer = require("puppeteer-core"); const url = "https://hub.packtpub.com/category/programming/"; const news = async () => { try { const browser = await puppeteer.launch({ executablePath: "/usr/bin/google-chrome", }); const page = await browser.newPage(); await page.goto(url, { waitUntil: "networkidle2" }); await page.screenshot({ path: "screenshot.png" }); let data = await page.evaluate(() => { let results = []; let items = document.querySelectorAll("div.td_module_10"); items.forEach((item) => { results.push({ link: item.querySelector("a.td-image-wrap").getAttribute("href"), image: item.querySelector("img.entry-thumb").getAttribute("src"), title: item.querySelector("h3.entry-title").textContent, author: item.querySelector("span.td-post-author-name").textContent, pubDate: item.querySelector("span.td-post-date").textContent, desc: item.querySelector("div.td-excerpt").textContent, }); }); return results; }); console.log(data); await browser.close(); } catch (e) { console.error(e); } }; news(); |

Notice that we have wrapped our code in a try...catch block so that errors can be handled.
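One thing a plain try...catch does not cover: if a step such as page.goto() throws, the call to browser.close() is skipped and the Chrome process may keep running. A possible way to guard against that is a small wrapper built on try...finally. The name `withBrowser` is hypothetical, and this is a sketch rather than anything Puppeteer provides.

```javascript
// Runs `task` with a browser and always closes the browser afterwards,
// whether the task succeeds or throws.
// `launch` is any function that returns a Promise for an object with close(),
// for example: () => puppeteer.launch({ executablePath: "/usr/bin/google-chrome" })
async function withBrowser(launch, task) {
  const browser = await launch();
  try {
    return await task(browser);
  } finally {
    // Executed on both the success path and the error path.
    await browser.close();
  }
}
```

With this in place, the scraping logic would go inside the task callback, and the outer try...catch would only need to log or report the error.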

We’re using Puppeteer’s built-in evaluate() method. It lets us run custom JavaScript code in the context of the page, as if we were executing it in the DevTools console. This is very handy for scraping information or performing custom actions.

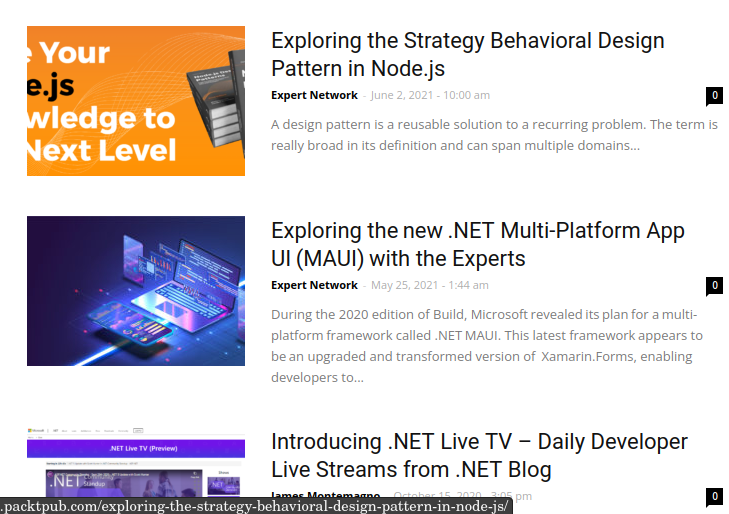

The code passed to the evaluate() method is pretty basic JavaScript that builds an array of objects, each having link, title, thumbnail image, description, author and published date fields that represent the story we see on https://hub.packtpub.com/category/programming/.
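The extraction code assumes that every item contains all six elements. If one is missing, querySelector() returns null and the whole evaluate() call throws. A more defensive variant could look like the sketch below. The function name `extractItems` and its `root` parameter are assumptions made for illustration, and the selectors are the same ones used in this post.

```javascript
// Intended to be passed to page.evaluate(). `root` defaults to the page's
// document; it is a parameter only so the function can also be tried in Node.
function extractItems(selector, root = document) {
  // The helpers live inside the function because Puppeteer serializes the
  // function itself, not the scope around it.
  const text = (el) => (el ? el.textContent : null);
  const attr = (el, name) => (el ? el.getAttribute(name) : null);

  return Array.from(root.querySelectorAll(selector)).map((item) => ({
    link: attr(item.querySelector("a.td-image-wrap"), "href"),
    image: attr(item.querySelector("img.entry-thumb"), "src"),
    title: text(item.querySelector("h3.entry-title")),
    author: text(item.querySelector("span.td-post-author-name")),
    pubDate: text(item.querySelector("span.td-post-date")),
    desc: text(item.querySelector("div.td-excerpt")),
  }));
}

// Inside the async function it would be used like this:
//   const data = await page.evaluate(extractItems, "div.td_module_10");
```

Missing fields come back as null instead of aborting the whole scrape, which makes it easier to spot markup changes on the site.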

The output looks something like this.

| [ { "link": "https://hub.packtpub.com/exploring-the-strategy-behavioral-design-pattern-in-node-js/", "image": "https://hub.packtpub.com/wp-content/uploads/2021/06/Mammino-DZone-highlight-218x150.png", "title": "Exploring the Strategy Behavioral Design Pattern in Node.js", "author": "Expert Network – ", "pubDate": "June 2, 2021 – 10:00 am", "desc": "\n A design pattern is a reusable solution to a recurring problem. The term is really broad in its definition and can span multiple domains… " }, // ... { "link": "https://hub.packtpub.com/announcing-net-5-0-rc-2-from-net-blog/", "image": "https://hub.packtpub.com/wp-content/uploads/2020/10/FolderTargets-0x9dBV-218x150.png", "title": "Announcing .NET 5.0 RC 2 from .NET Blog", "author": "Rich Lander [MSFT] – ", "pubDate": "October 13, 2020 – 4:23 pm", "desc": "Today, we are shipping .NET 5.0 Release Candidate 2 (RC2). It is a near-final release of .NET 5.0, and the last of two RCs… " } ] |
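As the output above shows, the raw textContent values carry stray whitespace, and the author field ends with a dash separator. A small clean-up pass in Node could tidy this up. The helper name `cleanItem` is hypothetical, and the rule for the author field is an assumption based only on the sample output.

```javascript
// Trims a value if it is a string; leaves anything else untouched.
const tidy = (value) => (typeof value === "string" ? value.trim() : value);

// Returns a copy of one scraped item with whitespace trimmed from every
// string field and a trailing dash separator removed from the author.
function cleanItem(item) {
  const cleaned = {};
  for (const [key, value] of Object.entries(item)) {
    cleaned[key] = tidy(value);
  }
  if (typeof cleaned.author === "string") {
    // Matches a hyphen or an en dash at the very end of the string.
    cleaned.author = cleaned.author.replace(/\s*[-–]$/, "");
  }
  return cleaned;
}

// Usage, given the array returned by page.evaluate():
//   const cleanedData = data.map(cleanItem);
```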

Only 10 items were returned, although more are available. The rest appear once we scroll down the page.

How do we scroll down and get more data? Well, that’s a story for another blog post.
